A few personal notes on "How AI Impacts Skill Formation" from Anthropic

Anthropic published a study called "How AI Impacts Skill Formation" that explores how AI assistance affects both productivity and learning when developers work with new concepts. They also published an article covering some of the results: "How AI assistance impacts the formation of coding skills".

Here are some notes about things I found interesting:

Study Design

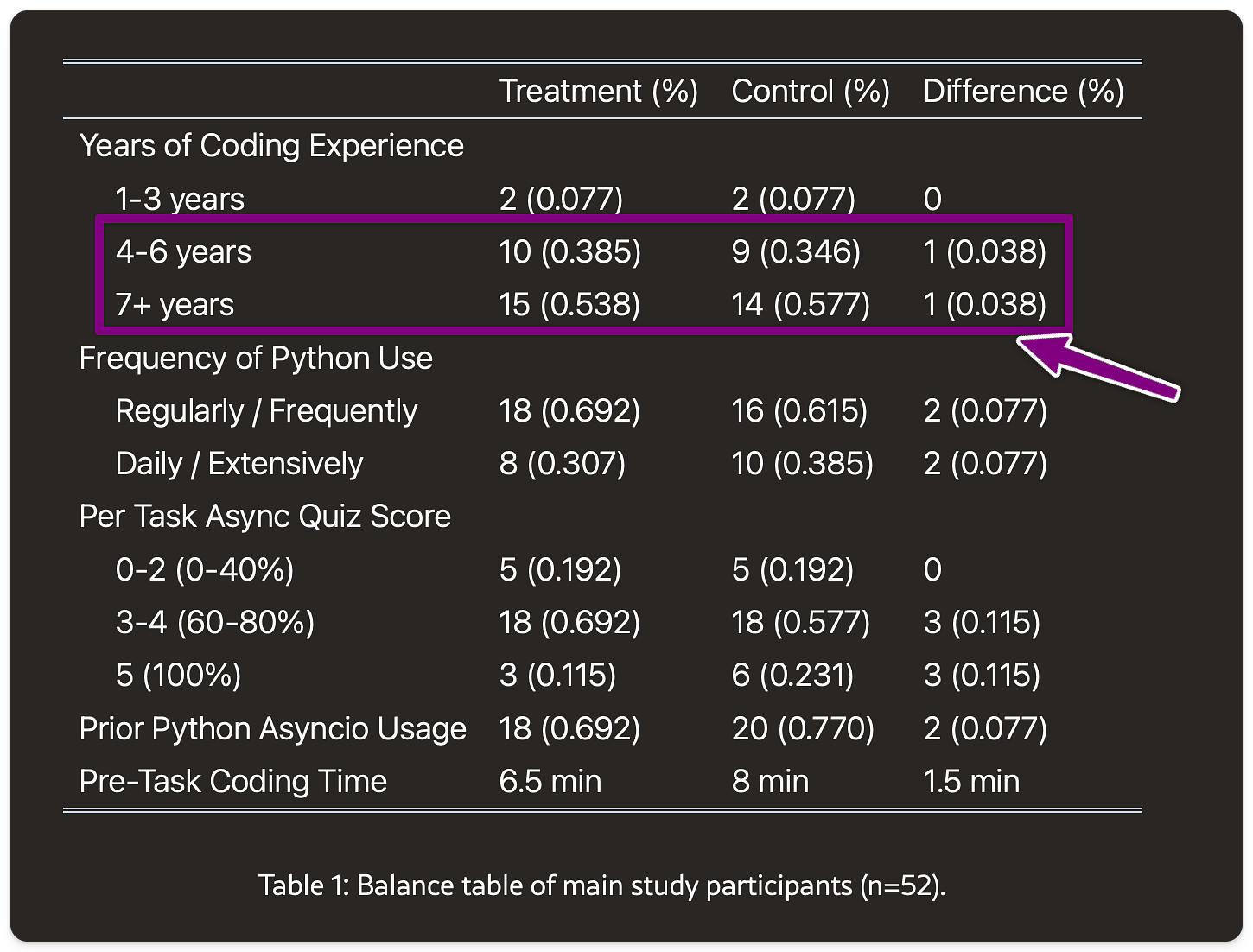

The researchers recruited 52 software engineers who were familiar with Python and had experience using AI coding assistance tools. The task involved learning Python asyncio, a library most participants hadn't used before.

One interesting detail: the article describes participants as "mostly junior" engineers, but when you look at the data, 55.8% have 7+ years of coding experience and 36.5% have 4-6 years. Together, that is 92.3% with at least four years of experience. This matters because the findings apply more to experienced developers learning new concepts than to absolute beginners.

ChatGPT agrees with me:

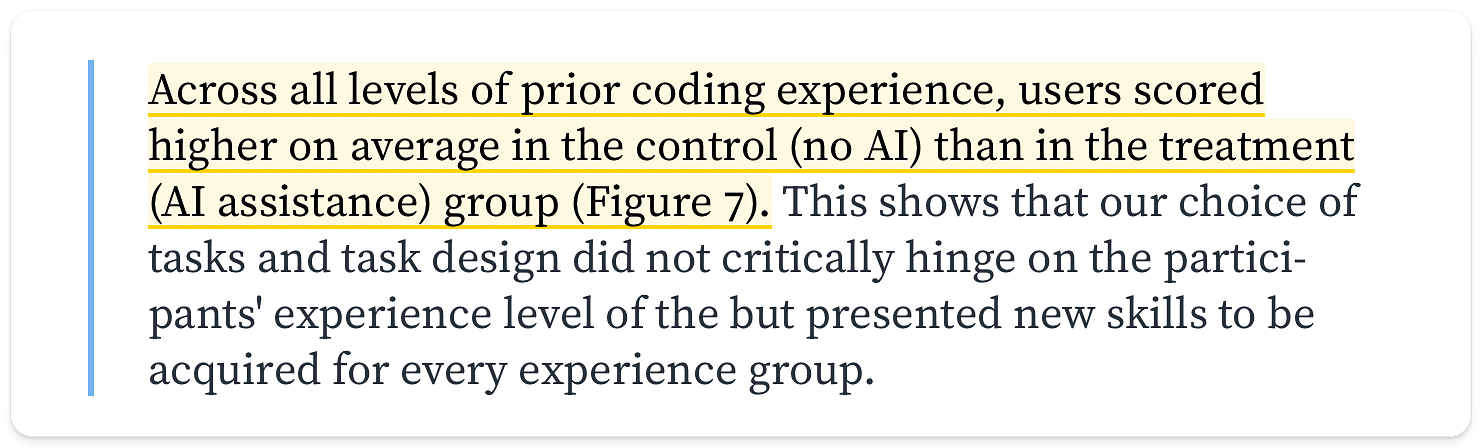

The Core Finding: AI Doesn't Automatically Help Learning

Developers who completed tasks without AI assistance scored higher on comprehension tests. Using AI to generate code doesn't automatically translate to understanding that code. This pattern held true across all experience levels.

This matches my experience. When I use AI to generate code, I forget that code very quickly unless I make an intentional effort. I need to actively review the code, build a mental model, and trace through the logic. Without that effort, I'm just watching the AI do something and forgetting about it within a couple of hours.

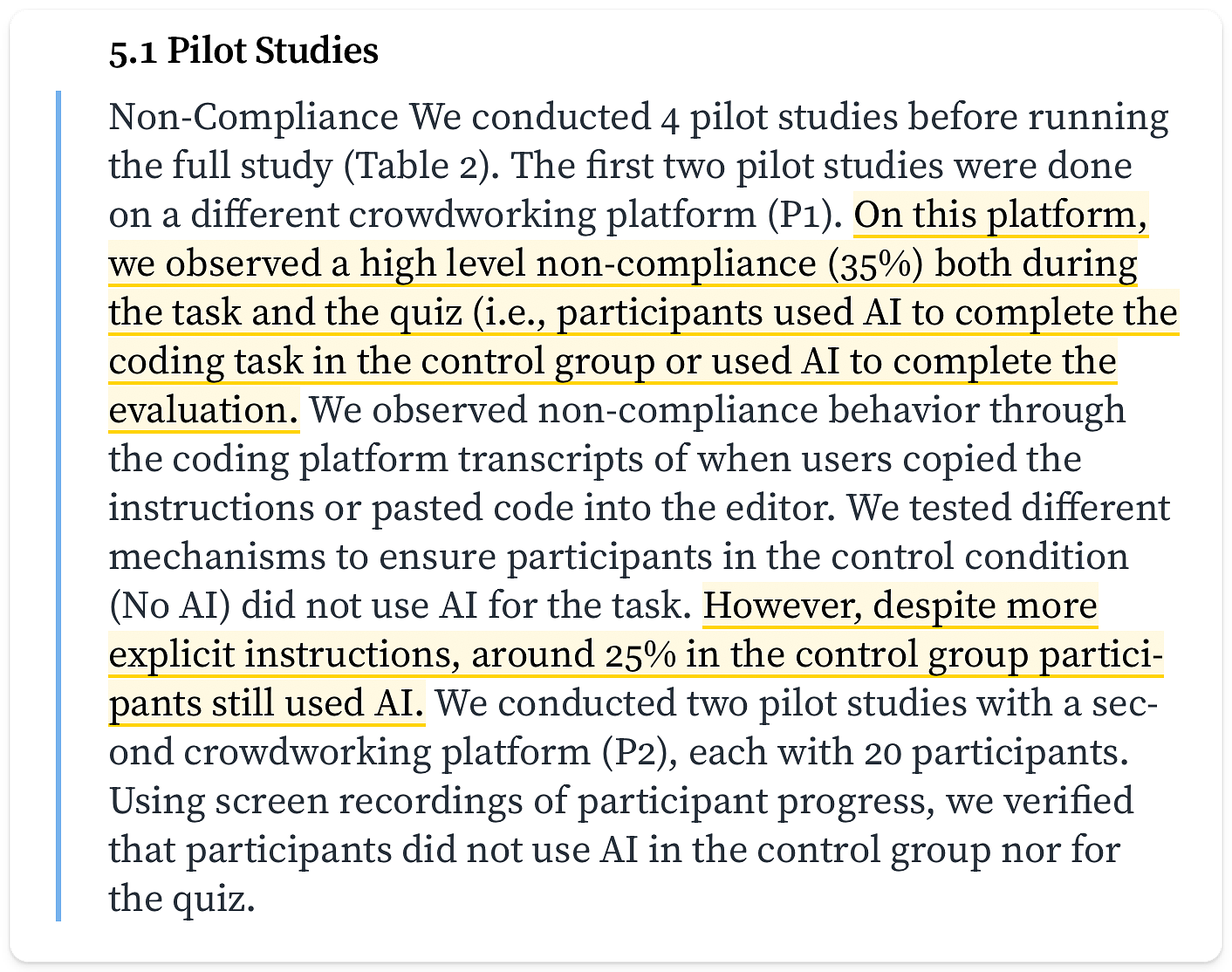

Interesting Finding from the Pilot Study

During their pilot studies, the researchers discovered something telling about how integrated AI has become in developer workflows. Even when explicitly told not to use AI, 25-35% of participants still did.

This suggests we reach for AI tools almost instinctively now. For a significant share of developers, at least, AI has become a default part of how they approach problems.

What Actually Works: Two Practical Approaches

The study identified two approaches that worked well for both task completion and comprehension:

Approach 1: Generation-Then-Comprehension. Generate code with AI, then ask follow-up questions to understand what it did.

This group showed strong understanding in their quiz results. The key was not just generating the code but actively engaging with it through questions. They used AI as a learning tool, not just a code generator.

Approach 2: Hybrid Code-Explanation. Ask AI to generate code and provide explanations in the same response.

These participants spent more time reading, but developed better understanding. The explanation forced them to engage with the concepts rather than just copying the solution. The slower pace actually contributed to better learning outcomes.

The Role of Debugging in Skill Formation

Encountering errors and debugging them plays a crucial role in skill formation. The control group (no AI) hit errors, had to understand why they happened, and learned through fixing them.

So far, I don't think this process can be fast-tracked. Debugging and incidents are essential parts of becoming a better developer. These experiences build the mental models you need to work effectively with code.
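To make this concrete, here is a small sketch of the kind of asyncio mistake you can run into when the library is new to you. It is an illustration only, not an example taken from the study, and the names `fetch_value`, `total_buggy`, and `total_fixed` are made up for it. Forgetting to await gives you coroutine objects instead of results, and working out why the error appears is the kind of debugging that builds a mental model.

```python
import asyncio


async def fetch_value(delay: float, value: int) -> int:
    """Pretend to do some I/O, then return a value (hypothetical helper)."""
    await asyncio.sleep(delay)
    return value


async def total_buggy() -> int:
    # Bug: calling a coroutine function without awaiting it only creates
    # coroutine objects, so sum() receives coroutines instead of ints
    # and raises a TypeError.
    pending = [fetch_value(0.01, v) for v in (1, 2, 3)]
    return sum(pending)


async def total_fixed() -> int:
    # Fix: gather() runs the coroutines concurrently and awaits all of them,
    # returning their results as a list in the original order.
    results = await asyncio.gather(*(fetch_value(0.01, v) for v in (1, 2, 3)))
    return sum(results)


if __name__ == "__main__":
    print(asyncio.run(total_fixed()))  # prints 6
```

Running `total_buggy()` fails with a `TypeError`, and Python may also warn that a coroutine was never awaited. Reading those two messages together is usually what makes the difference between a coroutine object and its result finally click.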

AI can help you explore codebases and libraries during debugging, but it shouldn't replace the process of understanding why something broke. In my experience, throwing a zero-shot prompt at AI about an error is still a dice roll. Sometimes it works, sometimes it doesn't.

A richer prompt with specific context, possible root causes, and hints about the codebase helps. But you need to understand the problem first to provide that context.
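As a rough sketch of what a context-rich debugging prompt could look like, the snippet below assembles one from the pieces mentioned above. The `DebugContext` class, the `build_debug_prompt` function, and the wording of the prompt are all hypothetical, not something from the study or any particular tool. It simply shows that each field requires you to have already thought about the problem.

```python
from dataclasses import dataclass, field


@dataclass
class DebugContext:
    """Everything you know about the failure (hypothetical structure)."""
    error_message: str
    expected_behavior: str
    suspected_causes: list[str] = field(default_factory=list)
    codebase_hints: list[str] = field(default_factory=list)


def build_debug_prompt(ctx: DebugContext) -> str:
    """Assemble a debugging prompt that carries context, not just the error."""
    lines = [
        "I'm debugging the following error:",
        ctx.error_message,
        "",
        f"Expected behavior: {ctx.expected_behavior}",
    ]
    if ctx.suspected_causes:
        lines += ["", "Possible root causes I've considered:"]
        lines += [f"- {cause}" for cause in ctx.suspected_causes]
    if ctx.codebase_hints:
        lines += ["", "Relevant details about the codebase:"]
        lines += [f"- {hint}" for hint in ctx.codebase_hints]
    # Ask for an explanation first, so the answer supports understanding.
    lines += ["", "Explain the most likely cause before suggesting a fix."]
    return "\n".join(lines)


if __name__ == "__main__":
    prompt = build_debug_prompt(
        DebugContext(
            error_message="TypeError: unsupported operand type(s) for +: 'int' and 'coroutine'",
            expected_behavior="total() should return the sum of three fetched values",
            suspected_causes=["a coroutine is called without await"],
            codebase_hints=["values are fetched by async helper functions"],
        )
    )
    print(prompt)
```

You can only fill in the suspected causes and codebase hints once you have looked at the failure yourself.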

Implications for Engineering Leaders

If your developers rely heavily on AI for code generation, you need processes that ensure they understand what's being generated.

During incidents or debugging sessions under pressure, developers need the skills to validate and debug code quickly. If they've been primarily copying AI-generated code without understanding it, they won't have built those mental models.

This means thinking about:

- How do we ensure learning happens alongside productivity gains?
- What code review processes help developers understand AI-generated code?
- How do we create space for debugging and error-handling practice?
- What onboarding approaches help new developers build foundational skills?