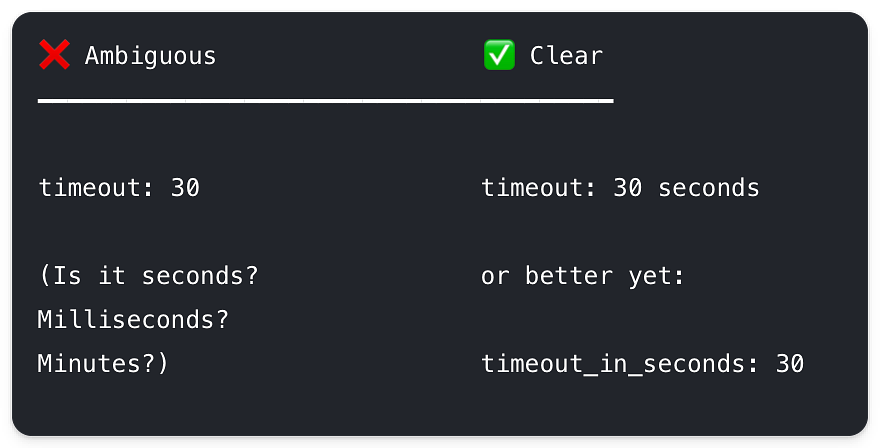

If you're writing API documentation, always specify units for duration parameters.

Not just "timeout: 30" but "timeout: 30 seconds" or, better yet, put the unit in the parameter name if you can: "timeout_in_seconds".

This is even more important inside your own codebase. Give variables names, descriptions, or comments that state the unit of measurement, especially when you are working with durations.
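Here is a minimal sketch of what that can look like in Python. The function names `connect` and `connect_with` are hypothetical, made up for illustration; the only real API used is `datetime.timedelta` from the standard library. One option puts the unit in the parameter name, the other lets the type carry the unit.

```python
from datetime import timedelta


# Hypothetical helper, named only for illustration.
# The unit lives in the parameter name, so a bare 30 cannot be misread.
def connect(host: str, timeout_in_seconds: float = 30.0) -> dict:
    """Pretend to open a connection and return the settings it would use."""
    return {"host": host, "timeout_in_seconds": timeout_in_seconds}


# Alternative (also hypothetical): accept a timedelta so the caller
# must state the unit explicitly when building the value.
def connect_with(host: str, timeout: timedelta) -> dict:
    """Same as connect(), but the duration type carries the unit."""
    return connect(host, timeout_in_seconds=timeout.total_seconds())


settings = connect_with("example.com", timeout=timedelta(minutes=2))
print(settings["timeout_in_seconds"])  # 120.0
```

Either way, whoever reads the call site, human or LLM, does not have to guess whether 30 means seconds or milliseconds.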

It matters for both developers and LLMs.

When the unit is ambiguous, the LLM guesses. Sometimes it guesses wrong. Then developers debug code they didn't write, hunting for a bug that shouldn't exist.

Clear documentation prevents bugs before they happen.